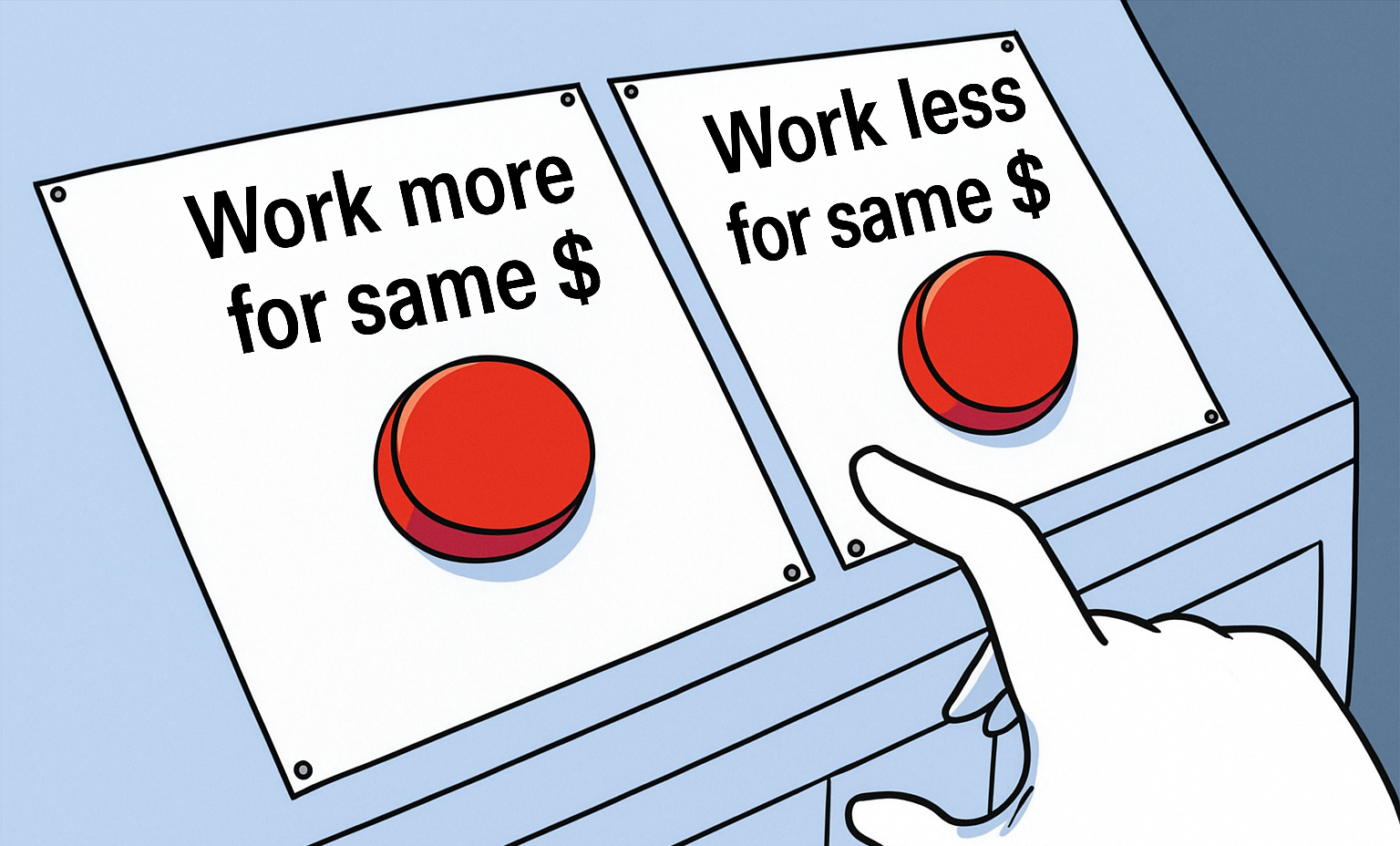

Figure 1: The modern programmer's dilemma

Today I realized that – distinct from the idea of AI misalignment – human resource departments have misaligned incentives when it comes to how employees will use (or already are using) large language models.

Maybe you're like me: an employee – not an employer – and so you trade your time and effort for money. Maybe you've also gotten better at using large language models, and they've made you noticeably more productive. In fact (and I agree with Thomas here), I can leverage them to make myself significantly more productive.[1]

Here's Bobby knowledge worker: he's found a way to increase his output by 30%! Maybe even 50%!

Now we come to the biased part of this piece of writing, but it's drawn from years of being an employee, and maybe your experience is the same as mine:

- If I put in significantly less work than I normally do, I probably get fired. Modern management is pretty inefficient, but they notice when work stops happening.

- If I put in significantly more work than I normally do, I probably get more work assigned to me. But across my entire career, and in the experience of most other knowledge workers I talk to, that extra work never comes with an equivalent increase in compensation.

Bobby knowledge worker, armed with his drastically increased efficiency, has two choices:

- Work as hard as he did before, increase his output, and – here's the personal, biased prediction – see no material gain from his employer for it.

- Work less – become lazy! – and remain as productive as before, losing nothing in compensation while he reads fiction, watches YouTube, and takes naps.

Which would you rather do?

Commentary

You're usually not supposed to try to preemptively address rebuttals to your argument, but I can't help myself here:

This is immoral!

Yes, I know. I'm not advocating for it; I'm describing the impending moral decision that many knowledge workers will likely face soon.

UCLA graduate celebrates by showing off the ChatGPT he used for his final projects right before officially graduating 😭 pic.twitter.com/hZAvrY1fJk

— FearBuck (@FearedBuck) June 18, 2025

Employees will just fall behind their peers!

Eventually. Once the rising tide of LLM assistance reaches everywhere, the productivity gains will force a reckoning between the different ways people decide to handle it.

But organizations are chronically slow. Despite AI buzzwords radiating across nearly every space, most of it is window-dressing and signalling, emphatically not part of a well-developed plan to integrate these tools and measure their effectiveness. Sorry, but beyond a certain level of required competence, most organizations are fumbling around in the dark.

These productivity claims are lies!

Maybe. You can believe or disbelieve my claims about my own experience with AI, but you can find numerous examples of very competent, publicly visible engineers vouching for the legitimacy and effectiveness of AI in their own workflows. I'd point to Mitchell Hashimoto, Jessie Frazelle, and Simon Willison as examples of highly experienced engineers doing high-quality work who are open about how LLM tools give them positive leverage.

After I wrote this piece, Xe published a post with strong arguments that although LLMs might help you produce more, what you produce may be of lower quality. I don't have enough exposure to LLM-generated code to hold a firm opinion here, but it's a compelling argument.

HR departments will adapt!

Delivering business value will trickle down!

I intentionally contrasted the terms "employee" and "employer" in the introduction. LLM productivity is a massive boon if you can trade a shiny feature for a big check you take a cut from. That equation doesn't hold if you aren't in control of that business process.

I'm telling your boss!

Personally? I'm navigating this thought experiment with moderate use of code-generation tools, and I'm not a time thief yet.

The market will correct this disparity!

Ideally, yes. Employees will leverage their increased ability to become worth more, and find employers who will compensate them for it.

In reality, I think this will happen:

- Employment is sticky. I don't see people jumping ship constantly to achieve optimal compensation equilibrium.

- This is already overwhelmingly the case: most people could get a significant pay increase by securing a more lucrative offer elsewhere instead of waiting out their own labyrinthine promotion cycles, yet many stay put. Big shifts in productive output just exacerbate the problem.

My argument falls apart if HR departments see more productive workers and give them all raises. But I doubt that will happen.

Footnotes:

None of this blog post was written with AI/LLMs.